Recently, a viral post drew a lot of attention by claiming that GPT was beaten at chess by a nearly half-century-old Atari 2600 running a chess cartridge.

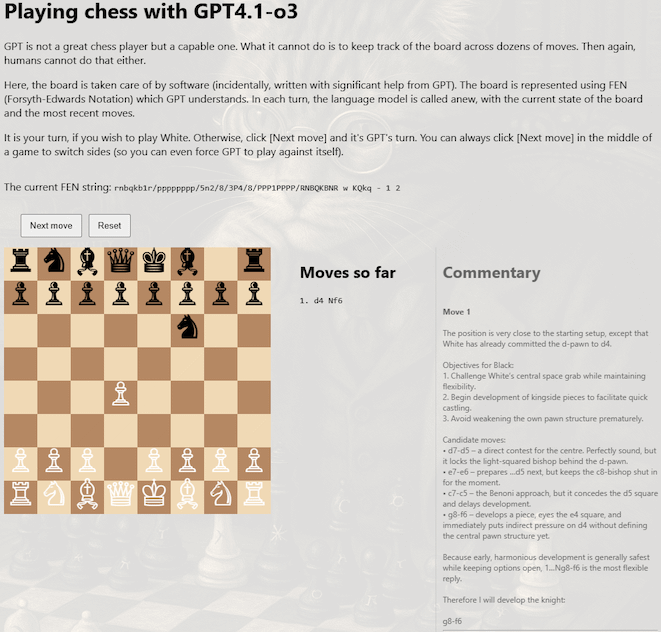

This claim rests on a fundamental misunderstanding of how language models work. You cannot expect a language model to keep track of the chessboard internally ("in its head") across many turns. You can, however, expect an advanced language model to propose legal, moderately competent moves at each turn, provided it is told the board's current state. To demonstrate this, I built a trial implementation, which is presented here.
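To make the idea concrete, here is a minimal sketch of a stateless turn: the model receives the full position on every move instead of being asked to remember it. This is not necessarily how the trial implementation works. The choice of FEN as the board encoding, the idea of including the list of legal moves in the prompt, and the `ask_model` callable (a stand-in for whatever language model API is used) are all assumptions for illustration.

```python
from typing import Callable, Optional


def build_prompt(fen: str, legal_moves: list[str]) -> str:
    """Describe the full current position so the model needs no memory of earlier turns.

    Assumption: the board is encoded as FEN and moves are listed in UCI notation.
    """
    return (
        "You are playing chess.\n"
        f"Current position (FEN): {fen}\n"
        f"Legal moves (UCI): {', '.join(legal_moves)}\n"
        "Reply with exactly one move from the list above."
    )


def choose_move(
    fen: str,
    legal_moves: list[str],
    ask_model: Callable[[str], str],  # hypothetical: sends a prompt, returns the model's text reply
    max_retries: int = 3,
) -> Optional[str]:
    """Ask the model for a move, re-prompting if the reply is not one of the legal moves."""
    prompt = build_prompt(fen, legal_moves)
    for _ in range(max_retries):
        reply = ask_model(prompt).strip()
        if reply in legal_moves:
            return reply
    # The model never produced a legal move; the caller decides how to handle this.
    return None
```

Because every prompt is rebuilt from the current position, the model never has to track the board across turns; the surrounding program owns the game state and only asks the model to pick from moves it has already verified as legal.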

To play, well, just play.